Inside Netflix’s 100× Speed Boost: The Maestro Rewrite

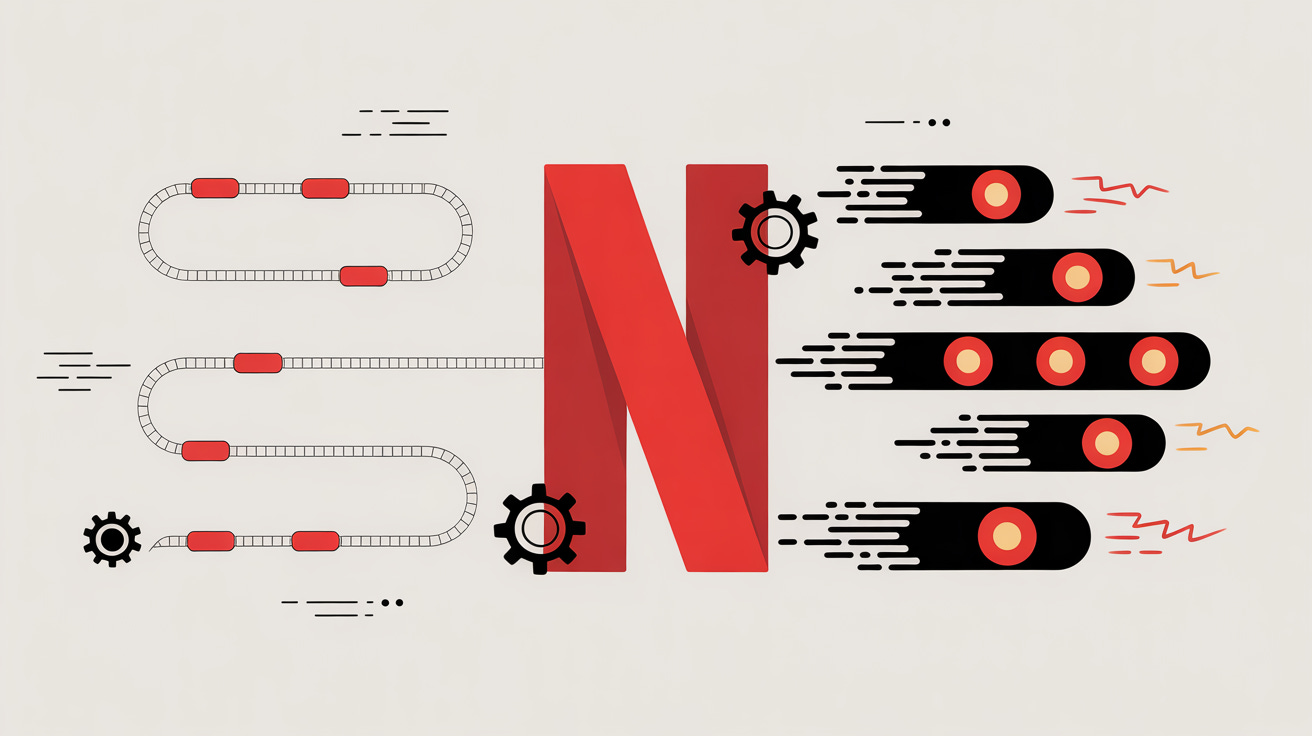

Why Netflix ditched polling and stateless workers to build a lightning-fast, event-driven workflow engine.

Dear readers,

Today, we’re diving into a fascinating story from Netflix’s engineering team, a story that shows how even world-class systems can hit scaling walls when the use case evolves, and how a smart architectural rethink can unlock massive performance gains. In Netflix’s case, the result was a 100× improvement.

In this post, we’ll cover:

The challenge Netflix faced with its Maestro workflow engine, and why tasks that used to run fine started slowing down

The architecture behind the slowdown, including stateless workers, polling queues, and database overhead

The redesign that supercharged Maestro, introducing stateful actors, in-memory workflows, task grouping, and event-driven execution

The results and takeaways, showing how a well-thought-out system design can make even massive workflows snappy

To set the stage, imagine if every task in your system took an extra 5 seconds to start. Not a big deal, right?

Now multiply that by millions of executions a day. That’s the hidden performance tax Netflix uncovered in Maestro. And their solution is a brilliant example of architecture beating brute force.

Reference: Netflix Tech Blog — 100× Faster: How We Supercharged Netflix Maestro’s Workflow Engine

The Problem Netflix Faced

Netflix has a system called Maestro. Think of Maestro as the “task manager” that controls when and how Netflix runs thousands of background jobs — like moving data, training ML models, generating recommendations, and more.

It’s like a conductor of an orchestra, telling each musician (task) when to start, stop, or wait.

For years, Maestro worked perfectly because most jobs ran only once a day or once every few hours. A few seconds of delay didn’t matter.

But then Netflix started using Maestro for real-time use cases — like ads, games, or quick data pipelines that run every few minutes or even seconds. Suddenly, they noticed:

Every task took an extra 5–10 seconds just to start.

That was overhead inside Maestro itself — before the actual work even began. This made fast workflows feel slow and frustrated developers who had to wait on every change.

Why It Was Slow

Originally, Maestro’s design worked like this:

A central system put tasks into a queue (like a to-do list).

Stateless workers (servers with no memory of previous tasks) kept polling the queue:

“Do you have something for me?”

When they got something, they loaded task details from a database, did the work, then wrote results back.

This design scaled well, but it came with costs:

Polling every few seconds caused delays.

Repeatedly loading and saving state slowed things further.

Using multiple systems (queues, databases, workers) added more lag.

It’s like every time you wanted to start a task, you had to:

Call your manager

Check your email

Unlock your computer

Then finally start working.

This overhead is fine once a day, but crippling if you’re doing it every minute.
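To make the cost concrete, here is a minimal sketch of a polling, stateless worker running on a simulated clock. It is not Maestro's code: `StatelessWorker`, the 2-second poll interval, and the dict standing in for the database are all assumptions for illustration. It shows where the delay comes from: a task waits until the next poll, and every task costs a database read and a write.

```python
from collections import deque


class StatelessWorker:
    """Hypothetical worker: keeps no state between tasks and polls on a timer."""

    def __init__(self, queue, database, poll_interval):
        self.queue = queue              # deque of (task_id, enqueued_at), oldest first
        self.database = database        # dict standing in for the task-state database
        self.poll_interval = poll_interval
        self.db_reads = 0
        self.db_writes = 0

    def run(self, until):
        """Wake up every poll_interval seconds (simulated) and drain arrived tasks."""
        start_delays = {}
        now = 0.0
        while now <= until:
            # Only tasks that were enqueued before this poll are visible.
            while self.queue and self.queue[0][1] <= now:
                task_id, enqueued_at = self.queue.popleft()
                state = dict(self.database[task_id])   # load task details
                self.db_reads += 1
                state["status"] = "done"               # the actual work would go here
                self.database[task_id] = state         # write results back
                self.db_writes += 1
                start_delays[task_id] = now - enqueued_at
            now += self.poll_interval
        return start_delays


if __name__ == "__main__":
    tasks = deque([("a", 0.5), ("b", 3.0)])
    db = {"a": {"status": "queued"}, "b": {"status": "queued"}}
    worker = StatelessWorker(tasks, db, poll_interval=2.0)
    print(worker.run(until=6.0), worker.db_reads, worker.db_writes)
```

In this toy model a task waits anywhere from zero to one full poll interval before it is even noticed, and that is before the load and save round-trips are counted.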

What Netflix Did to Fix It

Netflix didn’t just tweak Maestro; they redesigned its brain for real-time responsiveness.

Here’s how they did it:

1. Stateful Actors: Giving Each Task a “Memory”

Instead of stateless workers constantly asking “what next?”, each workflow or task got its own actor, a lightweight in-memory brain that:

Lives in memory (JVM)

Knows its next steps

Reacts instantly to events

Avoids repetitive database reads

Result: Tasks now start in milliseconds, not seconds.
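The actor idea can be sketched in a few lines. This is a simplified illustration, not Maestro's implementation (which runs on the JVM): the `WorkflowActor` class, its event names, and the linear list of steps are assumptions. The point is that the actor already holds its state in memory, so reacting to an event needs no poll and no database read.

```python
from collections import deque


class WorkflowActor:
    """Hypothetical actor: one per workflow, holding its own state in memory."""

    def __init__(self, workflow_id, steps):
        self.workflow_id = workflow_id
        self.remaining = deque(steps)   # steps not yet launched, in order
        self.running = None             # the step currently in flight
        self.completed = []
        self.mailbox = deque()          # events delivered to this actor

    def tell(self, event, payload=None):
        """Deliver an event; nothing is polled and nothing is loaded from a database."""
        self.mailbox.append((event, payload))

    def drain(self):
        """Handle every queued event and return the steps launched as a result."""
        launched = []
        while self.mailbox:
            event, payload = self.mailbox.popleft()
            if event == "step_done" and payload == self.running:
                self.completed.append(payload)
                self.running = None
            # The actor already knows its next step, so it can launch it at once.
            if event in ("start", "step_done") and self.running is None and self.remaining:
                self.running = self.remaining.popleft()
                launched.append(self.running)
        return launched


if __name__ == "__main__":
    actor = WorkflowActor("daily-report", ["extract", "transform", "load"])
    actor.tell("start")
    print(actor.drain())
    actor.tell("step_done", "extract")
    print(actor.drain())
```

A real engine would run each actor's mailbox on its own thread or scheduler; here `drain` is called by hand to keep the sketch deterministic.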

2. Keep Data in Memory, Sync in Background

They used the database only as a backup, not as the main source for every step.

The live state of each workflow stays in memory, where reading and updating it is extremely fast.

Think of it like working on a Google Doc in real-time, but everything is saved in the background. You don’t hit “Save” every time.
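One way to read this description is as a write-behind cache. The sketch below assumes that interpretation; how Maestro actually schedules its database writes is not covered here, and `WriteBehindState` and its methods are hypothetical names. Updates land in memory first, and a periodic `flush` copies only the changed keys to the store.

```python
class WriteBehindState:
    """Hypothetical in-memory state that is persisted in the background."""

    def __init__(self, store):
        self.store = store        # dict standing in for the database
        self.memory = {}          # live state, served straight from memory
        self.dirty = set()        # keys changed since the last flush
        self.store_writes = 0

    def update(self, key, value):
        self.memory[key] = value  # fast path: no database round-trip
        self.dirty.add(key)

    def get(self, key):
        return self.memory[key]

    def flush(self):
        """Would be called by a background timer; returns how many keys were written."""
        flushed = len(self.dirty)
        for key in sorted(self.dirty):
            self.store[key] = self.memory[key]
            self.store_writes += 1
        self.dirty.clear()
        return flushed

    @classmethod
    def recover(cls, store):
        """After a restart, rebuild the in-memory view from the stored copy."""
        state = cls(store)
        state.memory = dict(store)
        return state


if __name__ == "__main__":
    db = {}
    state = WriteBehindState(db)
    state.update("step1", "RUNNING")
    state.update("step1", "DONE")
    print(db, state.flush(), db)
```

The trade-off in this sketch is visible in `recover`: anything updated after the last flush is missing after a crash, so a real system needs some durable record for the updates it cannot afford to lose.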

3. Group Tasks Logically

They split workflows into “groups” and assigned each group to a specific server.

This way, related tasks stay on the same machine, which means less shuffling around and faster execution.

And if a server goes down, another can take over the group.

Think of it like assigning each teacher a set of students. If one teacher is sick, another takes over their class. But students don’t keep running between classrooms unnecessarily.
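The grouping and takeover logic might look roughly like the following. The hashing scheme, the round-robin assignment, and the function names (`group_for`, `assign_groups`, `fail_over`) are illustrative assumptions; the text above only says that workflows are split into groups, each group has an owning server, and another server can take over.

```python
import zlib


def group_for(workflow_id, num_groups):
    """Map a workflow to a group with a stable hash, so it always lands in the same group."""
    return zlib.crc32(workflow_id.encode("utf-8")) % num_groups


def assign_groups(num_groups, servers):
    """Give each group exactly one owning server (simple round-robin)."""
    return {group: servers[group % len(servers)] for group in range(num_groups)}


def fail_over(ownership, failed_server, survivors):
    """Hand the failed server's groups to the survivors; other groups do not move."""
    updated = dict(ownership)
    orphaned = [group for group, owner in ownership.items() if owner == failed_server]
    for index, group in enumerate(orphaned):
        updated[group] = survivors[index % len(survivors)]
    return updated


if __name__ == "__main__":
    owners = assign_groups(4, ["server-a", "server-b"])
    group = group_for("daily-report", 4)
    print(group, owners[group])
    print(fail_over(owners, "server-a", ["server-b"]))
```

Because the workflow-to-group mapping never changes, a failure only changes which server owns a group, which matches the teacher analogy: the class stays together and only the teacher is swapped.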

4. Removed the Middleman Queue

Instead of using an external queue that workers had to keep polling, they used:

In-memory queues for instant communication inside the system

A small database-backed mechanism to make sure tasks don’t get lost

Like passing a note directly to a colleague instead of sending an email and waiting for them to check their inbox.
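A minimal sketch of that combination, under assumptions: `DurableDispatcher` is a hypothetical name, and a plain dict stands in for the database-backed mechanism. Each task is recorded durably before it goes onto the in-memory queue, so a restart can re-enqueue whatever was still pending.

```python
from collections import deque


class DurableDispatcher:
    """Hypothetical dispatcher: in-memory handoff plus a durable record per task."""

    def __init__(self, journal):
        self.journal = journal    # dict standing in for a database table: task_id -> status
        self.inbox = deque()      # in-memory queue, lost if the process dies

    def submit(self, task_id):
        self.journal[task_id] = "pending"   # record first, so the task cannot vanish
        self.inbox.append(task_id)          # then hand it over with no polling delay

    def run_next(self):
        if not self.inbox:
            return None
        task_id = self.inbox.popleft()
        self.journal[task_id] = "done"      # the actual work would go here
        return task_id

    def recover(self):
        """After a restart, re-enqueue every task the journal still marks as pending."""
        queued = set(self.inbox)
        for task_id, status in self.journal.items():
            if status == "pending" and task_id not in queued:
                self.inbox.append(task_id)


if __name__ == "__main__":
    journal = {}
    first = DurableDispatcher(journal)
    first.submit("t1")
    first.submit("t2")
    first.run_next()
    # Simulate a crash: the new process has an empty inbox but the same journal.
    second = DurableDispatcher(journal)
    second.recover()
    print(list(second.inbox))
```

The in-memory queue gives the speed, and the journal is only consulted on recovery, so the common path never waits on a poll.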

5. Gradual Migration with Real Testing

They built a testing framework to run real production workflows in parallel on the new system to catch bugs.

Then they moved users over gradually.

Like test-driving a new engine before swapping it into the airplane mid-flight.
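The parallel-run idea can be sketched as a comparison harness. Everything here is a stand-in: `shadow_compare`, the toy engines, and the workflow dicts are hypothetical, and the real framework is not described in detail above. The shape is the useful part: run the same workflow through both engines and report any difference before users are moved.

```python
def shadow_compare(workflows, current_engine, candidate_engine):
    """Run each workflow through both engines and collect any differing results."""
    mismatches = []
    for workflow in workflows:
        expected = current_engine(workflow)
        actual = candidate_engine(workflow)
        if expected != actual:
            mismatches.append(
                {"workflow": workflow["id"], "expected": expected, "actual": actual}
            )
    return mismatches


def current_engine(workflow):
    """Toy stand-in for the existing engine: runs steps in their declared order."""
    return list(workflow["steps"])


def buggy_candidate_engine(workflow):
    """Toy stand-in for a new engine with an ordering bug (sorts steps by name)."""
    return sorted(workflow["steps"])


if __name__ == "__main__":
    samples = [
        {"id": "wf1", "steps": ["extract", "load"]},
        {"id": "wf2", "steps": ["transform", "load"]},
    ]
    for mismatch in shadow_compare(samples, current_engine, buggy_candidate_engine):
        print(mismatch)
```

An empty mismatch list over a representative sample is the kind of signal that would justify moving the next batch of users.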

The Results

The startup delay per task dropped from roughly 5 seconds to about 50 milliseconds.

That’s a 100× speedup.

Developers no longer have to wait between iterations.

Real-time workflows run smoothly.

Maestro remains scalable but is now fast too.

Why This Matters (Even If You’re Not Netflix)

Many engineering teams optimize for scale but ignore the latency added inside the system itself.

Polling, stateless workers, and external queues are simple to build, but add hidden costs.

By shifting to:

Event-driven designs

In-memory state

Actor models

…you can unlock massive speed gains without simply throwing more servers at the problem.

Netflix’s journey is a reminder: sometimes, true performance comes from rethinking architecture, not just optimizing code.

Conclusion

Netflix’s Maestro evolution is more than just a speed hack. It’s a masterclass in system design.

When workloads change, the architecture that once worked well can become the bottleneck. By moving from a centralized, polling-based design to a decentralized, actor-driven model, Netflix made Maestro real-time ready.

For the rest of us, the takeaway is clear:

✅ Don’t underestimate internal latency.

✅ Event-driven + in-memory approaches can deliver big wins.

✅ Architectural redesign can unlock performance leaps that brute force never will.